Guidance of diffusion model¶

- Classifier Guidance: https://arxiv.org/abs/2105.05233

- openai

- Prafulla Dhariwal & Alex Nichol

-

- Tim Salimans

- Generative Adversarial Networks (GANs) Improvements

- Developed techniques to improve GAN training stability

- Introduced semi-supervised learning with GANs

- Proposed Inception Score for evaluating generative models

- Autoregressive Models

- Worked on PixelCNN++ and GPT-1

- Contributed to sequence modeling advancements

- Variational Autoencoders (VAEs)

- Research in probabilistic generative modeling

- Explored methods to improve sample quality and latent space learning

- Diffusion Models & Acceleration

- Contributed to Imagen (Google's high-quality text-to-image model)

- Focused on Imagen Video for video generation

- Developed distillation techniques to accelerate diffusion model inference

- Generative Adversarial Networks (GANs) Improvements

Classifier Free Guidance: https://arxiv.org/pdf/2207.12598

- Jonathan Ho

- Denoising Diffusion Probabilistic Models (DDPM)

- Introduced a novel approach to generative modeling

- Stepwise noise addition and removal for high-quality image synthesis

- Established the foundation for modern diffusion models

- Diffusion Models

- Basis for state-of-the-art generative AI research

- Inspired numerous advancements in image and video generation

- Google Research

- Works as a research scientist

- Focused on AI, deep learning, and probabilistic generative models

- Denoising Diffusion Probabilistic Models (DDPM)

1. Classifier Guidance¶

Classifier guidance in diffusion models introduces an auxiliary classifier \( p_\theta(c | z_\lambda) \) that adjusts the score function \( \epsilon_\theta(z_\lambda, c) \) by incorporating the gradient of the log-likelihood of the classifier. This results in modifying the sampling process to encourage the generation of samples that the classifier confidently associates with the target class \( c \).

1.1 Background: Score Function in Diffusion Models¶

Diffusion models rely on score-based sampling, where the denoising function \( \epsilon_\theta(z_\lambda, c) \) predicts noise at each timestep \( \lambda \) in the reverse diffusion process. The fundamental idea is to approximate: $$ \epsilon_\theta(z_\lambda, c) \approx -\sigma_\lambda \nabla_{z_\lambda} \log p_\theta(z_\lambda | c) $$ where:

- \( z_\lambda \) is the latent variable (noisy sample at time \( \lambda \)),

- \( p_\theta(z_\lambda | c) \) is the true conditional distribution we wish to sample from,

- \( \sigma_\lambda \) is the noise level.

1.2 Classifier Guidance: Adjusting the Score Function¶

Instead of relying purely on the diffusion model's learned score, we introduce an auxiliary classifier \( p_\theta(c | z_\lambda) \) that provides an additional gradient term. This is done by reweighting the gradient of the log-likelihood of the classifier.

The modified score function incorporates this additional term:

Since we know from Bayes' rule:

taking the gradient with respect to \( z_\lambda \) gives:

Thus, the modified score function can be rewritten as:

This means that the diffusion model now upweights regions of the sample space where the classifier assigns high confidence to the target class.

1.3 Classifier-Guided Sampling Distribution¶

By modifying the score function, we change the implicit generative distribution. The resulting conditional sampling distribution follows:

- The term \( p_\theta(c | z_\lambda)^w \) acts as a reweighting factor, increasing the probability of samples where the classifier confidently predicts class \( c \).

- As \( w \to 0 \), the model behaves like a standard conditional diffusion model.

- As \( w \to \infty \), the distribution concentrates on a small region of high-classifier-confidence samples, leading to better Inception Scores but reduced diversity.

1.4 Comparing Classifier Guidance for Conditional vs. Unconditional Models¶

We can analyze the effect of applying classifier guidance to an unconditional model. If we start with an unconditional prior:

which shows that applying classifier guidance with weight \( w + 1 \) to an unconditional model should theoretically match applying guidance with weight \( w \) to a conditional model.

However, in practice:

- Best results are obtained when classifier guidance is applied to an already class-conditional model.

- This suggests that learning class-conditioned priors during training is beneficial, even when applying guidance at inference time.

1.5 Summary of Classifier Guidance in Diffusion Models¶

1.5.1 Key Equations¶

- Classifier-Guided Score Function

$$ \tilde{\epsilon}\theta(z\lambda, c) = -\sigma_\lambda \nabla_{z_\lambda} \left[ \log p_\theta(z_\lambda | c) + w \log p_\theta(c | z_\lambda) \right]. $$

- Modified Sampling Distribution

$$ \tilde{p}\theta(z\lambda | c) \propto p_\theta(z_\lambda | c) p_\theta(c | z_\lambda)^w. $$

- Effect of Increasing Guidance Strength \( w \):

- Higher \( w \) → Better class consistency, improved Inception Score.

- Higher \( w \) → Lower diversity, risk of mode collapse.

- Using classifier guidance on a conditional model performs better than using it on an unconditional model.

1.5.2 Practical Implications:¶

- Tuning \( w \) is crucial: setting it too high leads to overfitting to classifier decisions.

- Using classifier guidance with a conditional diffusion model is empirically superior to using it with an unconditional model.

This derivation provides the theoretical foundation for classifier-guided diffusion models and explains how they bias generation towards perceptually better samples while balancing diversity. 🚀

2. Classifier Free Guidance¶

Instead of having an explicit conditional score function

where the gradient is taken with respect to \(z_\lambda\),

let's see if we can find an implicit representation of the conditional score function.

We want to express the gradient of the log-likelihood of the classifier \( p_\theta(c | z_\lambda) \) in terms of the gradients of the unconditional log-likelihood \( p_\theta(z_\lambda) \) and the conditional log-likelihood \( p_\theta(z_\lambda | c) \).

Using Bayes' theorem, we express the classifier probability \( p_\theta(c | z_\lambda) \) as:

Taking the logarithm:

Now, we differentiate both sides with respect to \( z_\lambda \):

where:

- \( \nabla_{z_\lambda} \log p_\theta(z_\lambda | c) \) is the conditional score function.

- \( \nabla_{z_\lambda} \log p_\theta(z_\lambda) \) is the unconditional score function.

Thus, we have derived:

Interpretation

- This equation shows that the gradient of the classifier's log-likelihood can be interpreted as the difference between the conditional and unconditional score functions.

- This explains why classifier guidance modifies the score function in diffusion models—by shifting the unconditional score towards the class-conditional score.

In Classifier-Guided Diffusion Models, we modify the score function:

Substituting our derived equation:

which simplifies to:

This is exactly the Classifier-Free Guidance (CFG) formula, showing that CFG implicitly approximates classifier guidance without explicitly computing classifier gradients.

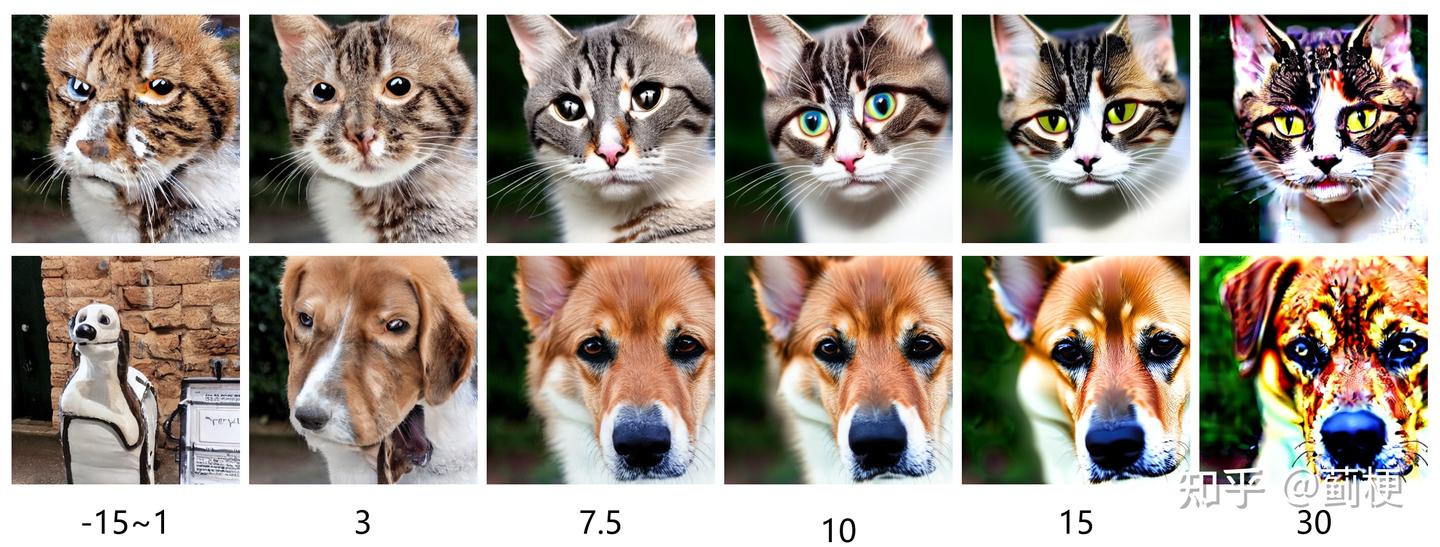

Here we have an experiment on the guidance scale. When the value of \(w\) increases, the model follows the prompt more tightly. However, if it is too large, this will result in poor quality.

twice forward

For the classifier free guidance algorithm, it takes two forward each step which may be not that efficiency. This can be optimized

💬 Comments Share your thoughts!